Many organizations are currently evaluating how to approach Artificial Intelligence (AI) and how best to implement it. When discussing this integration, particularly in education and training, several key factors must be considered.

Defining AI and Its Technologies

The term “AI” is often used as a catch-all for Large Language Models (LLMs) and the services built upon them. In reality, AI is an umbrella term encompassing various technologies, most notably:

- Machine Learning

- Neural Networks

- Natural Language Processing (NLP)

- Large Language Models (LLMs)

While LLM-based services (such as Claude, ChatGPT, Copilot, Gemini, and others) have gained significant popularity, they are often criticized for “hallucinations”: output that sounds plausible but is unreliable or factually wrong. The lack of transparency in AI-driven processes has also been identified as a challenge. However, new technologies typically have early flaws that are reduced as they mature. Expectations should be balanced against the potential benefits while actively minimizing risks.

AI in Education and Assessment

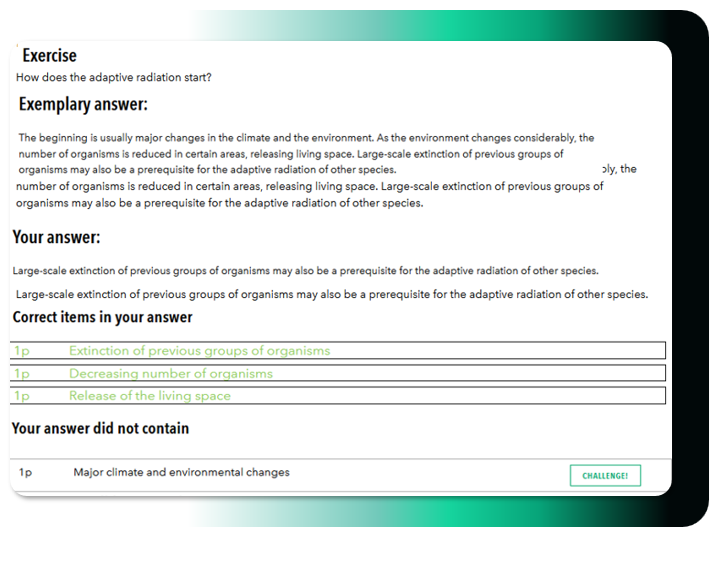

In education, AI offers numerous applications, yet the assessment of written work remains one of the most complex areas. Since grading significantly impacts a student’s academic success and future path, AI’s role is particularly sensitive in high-stakes situations like admissions and recruitment.

The EU AI Act and Human Oversight

The EU AI Act regulates the use of AI in education and emphasizes the importance of human-led instruction. To mitigate uncertainty, the Act underscores that humans must remain central to assessment and guidance. AI-driven solutions must be non-discriminatory, reliable, and fair, mirroring the requirements placed on human evaluators.

Key safety features include:

- The Right to Rectification: There must always be a way to appeal and correct an erroneous AI interpretation.

- Two-Step Process: AI generates an assessment proposal, which a human then reviews and verifies.

- Objectivity: AI promotes fairness by processing anonymized student responses.
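The three safeguards above can be illustrated with a minimal Python sketch. It is not Eximiatutor's implementation: the `pseudonymize` helper, the `Assessment` class, and all field names are hypothetical, chosen only to show how an AI proposal, a human confirmation, and a later correction could be kept apart in one record. Note also that a salted hash is pseudonymization rather than full anonymization, so it is a simplification of the "anonymized responses" idea.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


def pseudonymize(student_id: str, salt: str) -> str:
    """Replace a student identifier with a salted hash (hypothetical helper)."""
    digest = hashlib.sha256((salt + student_id).encode("utf-8")).hexdigest()
    return digest[:12]


@dataclass
class Assessment:
    pseudonym: str                       # the grader sees this, never the name
    proposed_grade: int                  # step 1: produced by the AI
    final_grade: Optional[int] = None    # step 2: set only by a human
    history: list = field(default_factory=list)  # audit trail of human actions

    def confirm(self, teacher: str, grade: Optional[int] = None) -> None:
        """Teacher accepts the proposal (grade=None) or overrides it."""
        self.final_grade = self.proposed_grade if grade is None else grade
        self.history.append((teacher, "confirm", self.final_grade, ""))

    def rectify(self, teacher: str, grade: int, reason: str) -> None:
        """Correct an already confirmed grade, for example after an appeal."""
        if self.final_grade is None:
            raise ValueError("nothing to rectify: grade not yet confirmed")
        self.final_grade = grade
        self.history.append((teacher, "rectify", grade, reason))


# Example: the AI proposes 3, the teacher confirms, an appeal raises it to 4.
record = Assessment(pseudonymize("alice@example.org", salt="course-42"), proposed_grade=3)
record.confirm("teacher-1")
record.rectify("teacher-1", 4, "criterion 2 was met in paragraph 3")
```

In this sketch the grade has no effect until `confirm` is called, and every human action is logged, which is one simple way to make the appeal path auditable.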

Risk Classification and Compliance

Under the EU AI Act, AI-based assessment in education is classified as high-risk (education and vocational training are among the high-risk areas listed in Annex III), especially when used for student profiling or predicting performance.

Eximiatutor’s Teacher Tool is designed to be fully compliant with the EU AI Act. It does not perform automated high-stakes decision-making or profiling. The system has undergone a GDPR compliance review, a Data Protection Impact Assessment (DPIA), and a Fundamental Rights Impact Assessment (FRIA) to ensure data privacy and ethical standards.

The Eximiatutor Hybrid-AI Approach

Our aicheq technology uses a “Hybrid-AI” model that combines NLP, classification, and an LLM. Its core strength lies in classification and NLP, which means:

- Teachers Maintain Control: Teachers create the tasks and set the factual criteria based on the curriculum.

- Transparency: Because the criteria are defined by the teacher, the grounds for each assessment are clear and can be traced back to them.

- Human-in-the-Loop: The teacher always confirms or edits the AI’s assessment proposal.
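As a rough illustration of how teacher-defined criteria can keep an assessment transparent, the following Python sketch scores an answer criterion by criterion and records the evidence for each decision. It assumes simple keyword matching as a stand-in for the classification and NLP components; the real aicheq method is not described here, and `Criterion`, `propose`, and `teacher_review` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    keywords: tuple  # terms the teacher expects (stand-in for a trained classifier)
    points: int


def propose(answer: str, criteria: list) -> dict:
    """Build an assessment proposal that lists, per criterion, what was found."""
    text = answer.lower()
    rows = []
    for c in criteria:
        hits = [k for k in c.keywords if k.lower() in text]
        rows.append({
            "criterion": c.name,
            "met": bool(hits),
            "evidence": hits,                    # the grounds for the decision
            "points": c.points if hits else 0,
        })
    return {"rows": rows, "total": sum(r["points"] for r in rows)}


def teacher_review(proposal: dict, overrides: dict) -> dict:
    """Apply the teacher's per-criterion point overrides to produce the final result."""
    rows = [dict(r, points=overrides.get(r["criterion"], r["points"]))
            for r in proposal["rows"]]
    return {"rows": rows,
            "total": sum(r["points"] for r in rows),
            "confirmed_by_teacher": True}


# The teacher sets the task criteria; the AI proposes; the teacher edits one row.
criteria = [
    Criterion("names the process", ("photosynthesis",), 2),
    Criterion("mentions inputs", ("carbon dioxide", "water"), 2),
]
proposal = propose("Photosynthesis uses water and light.", criteria)
final = teacher_review(proposal, {"mentions inputs": 1})
```

Because each row carries its own evidence, a teacher (or a student appealing a grade) can see which criterion produced which points before anything is confirmed.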

AI is a powerful tool, but its use must be guided and managed. Whether generating study materials or assessing work, the human creator remains responsible for the accuracy and utility of the content.